How Much Better is OpenAI’s Newest GPT-3 Model?

On November 28th, OpenAI released a new addition to the GPT-3 model family: davinci-003. This latest model builds on InstructGPT, using reinforcement learning with human feedback to better align language models with human instructions. Unlike davinci-002, which uses supervised fine-tuning on human-written demonstrations and highly scored model samples to improve generation quality, davinci-003 is a true reinforcement learning with human feedback (RLHF) model. It is trained with PPO to optimize the generated text’s score against a separate “reward model”, which is trained on rating comparisons by human graders of different model outputs. More details can be found in OpenAI’s model index for researchers. The net result is that davinci-003 is tuned to produce outputs that it thinks would be scored highly by humans. Scale is proud to partner with OpenAI to provide this human feedback.

OpenAI’s announcement email mentions the following improvements for davinci-003:

- “It produces higher quality writing. This will help your applications deliver clearer, more engaging, and more compelling content.

- It can handle more complex instructions, meaning you can get even more creative with how you make use of its capabilities now.

- It’s better at longer form content generation, allowing you to take on tasks that would have previously been too difficult to achieve.”

But how much better is davinci-003, really? We decided to put it to the test quantitatively. Using Scale Spellbook, the platform to build, compare and deploy large language model apps, we evaluated davinci-003 versus davinci-002 across tasks ranging from few- and zero-shot classification, summarization, and poetry writing. Here’s what we found.

Classification

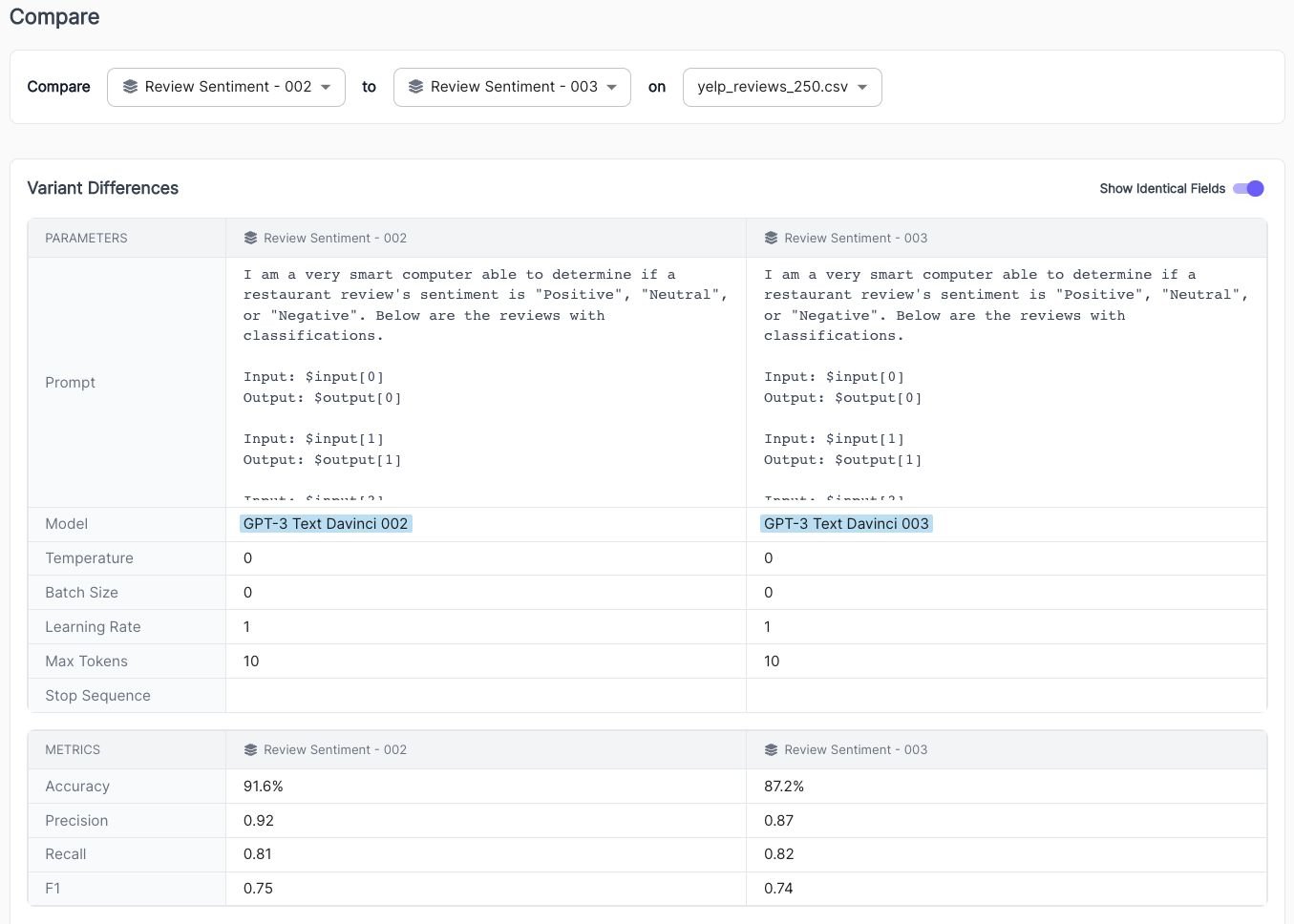

It’s well known that davinci-002 performs very well on classification with a few-shot prompt, and it appears that davinci-003 offers comparable (but slightly worse) performance few-shot. Using Spellbook’s built-in evaluation feature on 250 Yelp reviews, we compare the two models’ classification of these reviews as Positive, Negative, or Neutral in a four-shot prompt—meaning four labeled examples are included in the prompt itself.

The davinci-003 model has 87.2% accuracy on this 250 Yelp review dataset sample, slightly lower than davinci-002’s 91.6%.

However, if we ask the two models to classify these same reviews in a zero-shot prompt, we find that davinci-003 scores more than 30% higher in accuracy than davinci-002—and on par with a davinci-002 few-shot prompt! This is a substantial improvement in zero-shot classification performance. In this classification setting, adding examples to the prompt actually lowers davinci-003’s accuracy.

Beyond accuracy, we found that asking davinci-003 to explain its rationale in a zero-shot prompt works reliably, and provides a better explanation than a one-shot davinci-002 about 75% of the time, based on Scale’s human evaluation workforce judging a sample of 100 outputs.

Text Generation

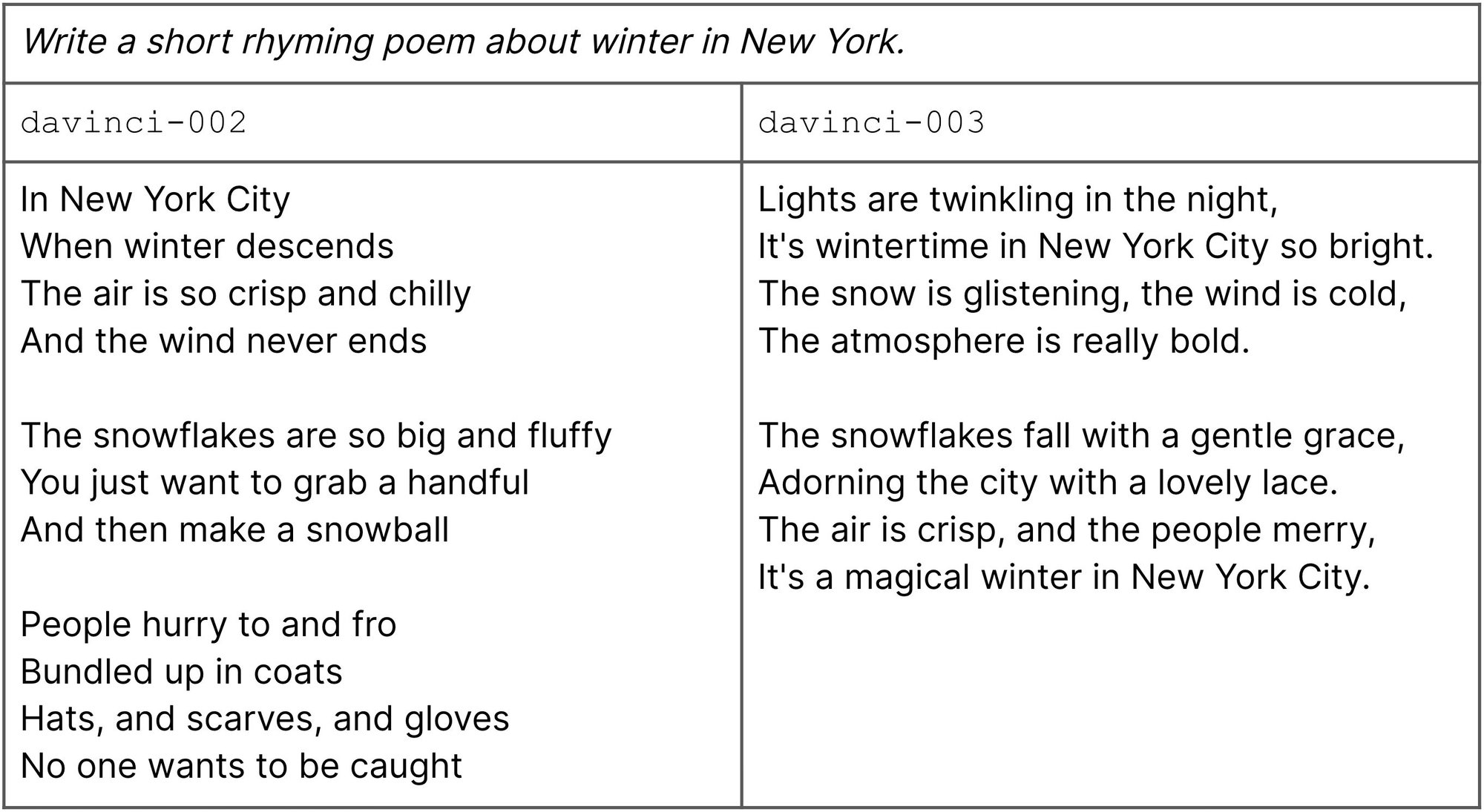

The most notable performance leap comes in how davinci-003 understands word and sentence structure, especially rhyming and syllables. In prompting for a poem that rhymes, davinci-003 constructs a desired output, whereas davinci-002 largely fails to implement rhyming.

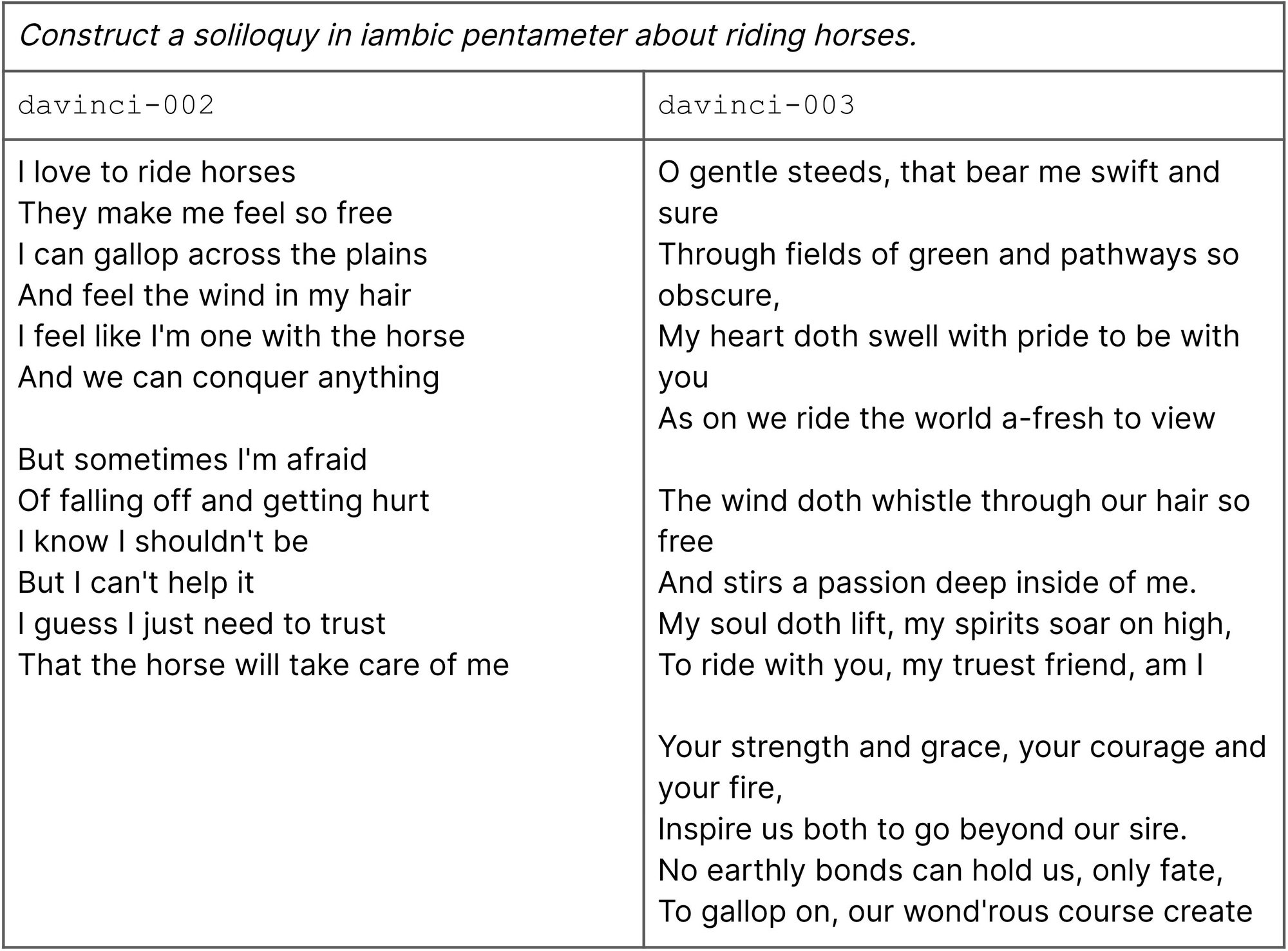

davinci-003 is also better equipped to handle requests for complex output structures, such as iambic pentameter (think Shakespeare). davinci-002 certainly provides a poem, but ignores the request for lines of 5 pairs of stressed and unstressed syllables (buh-BUM). While not perfect, davinci-003’s output is significantly more faithful to the requested prompt.

Summarization

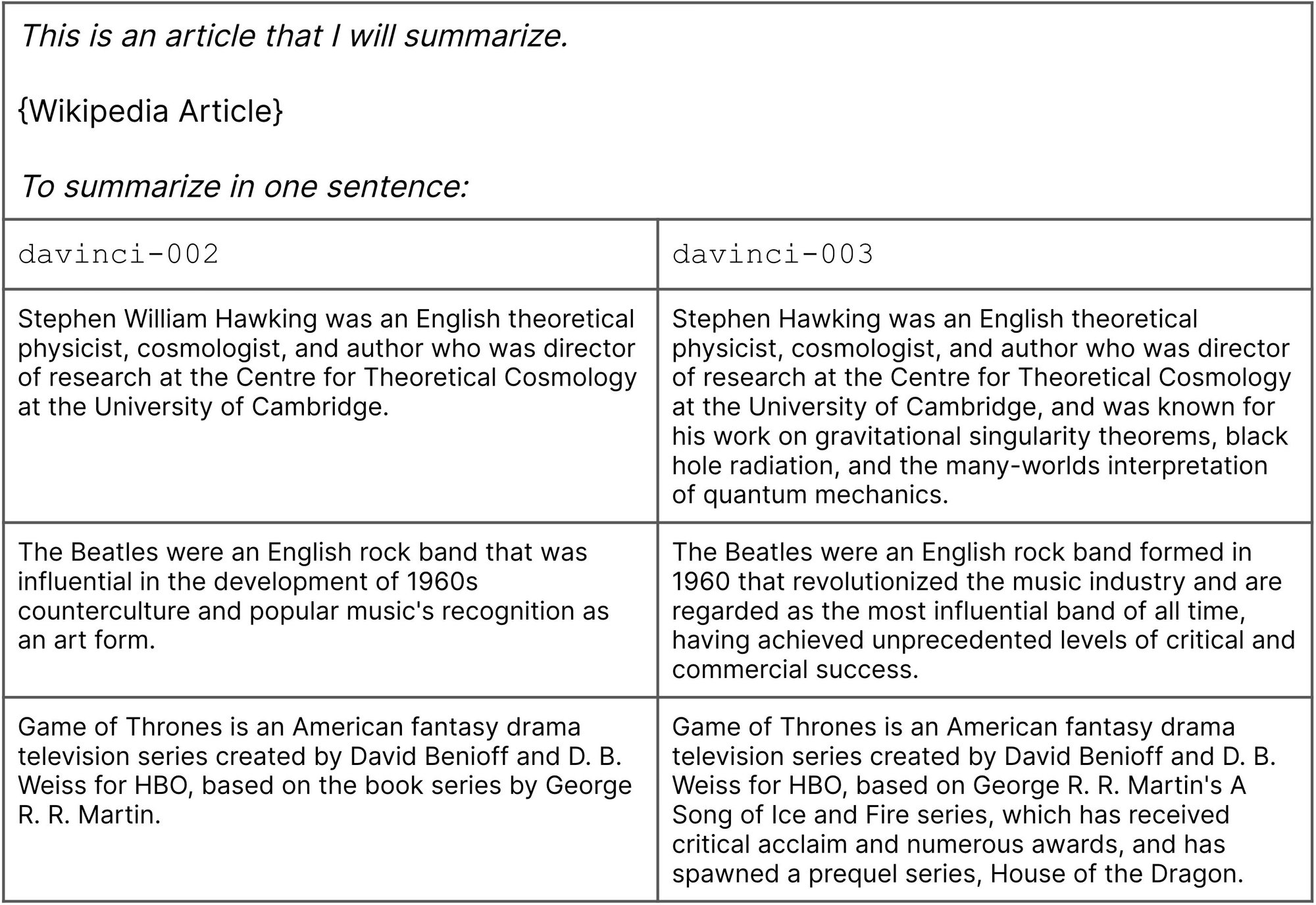

One practical difference between the two variants is verbosity. In our experimentation, davinci-003 consistently generates longer outputs than davinci-002 on the same prompts. This is most visible in summarization tasks, where the value of the result may depend on its brevity.

In a sample of 60 articles, we tested both variants’ summarization capabilities by asking them to summarize the introductory paragraphs of lengthy Wikipedia pages. The additional text generated by davinci-003 is typically beneficial, including information central to an article’s importance. However, the resulting summaries are also wordy. In our analysis, davinci-003 generates outputs about 65% longer than davinci-002 under identical prompts.

It will be important for GPT-3 users to bear in mind davinci-003’s talkativeness when choosing between the two variants. In our testing, given the task of generating a 1-sentence summary, davinci-003 produced 42 words on average, nearly doubling davinci-002‘s average of 23. The shortest summary davinci-003 gave back was a beefy 32 words, compared to only 12 words for davinci-002. This tendency extends to multi-sentence summaries as well.

For use cases that value brevity, davinci-002 is the safer choice. Nevertheless, for many use cases, the longer and more detailed completions provided by davinci-003 can be beneficial.

Conclusion

Overall, davinci-003 is a meaningful improvement from davinci-002, especially for zero-shot classification and complex text generation.

While many of the benefits of the latest iteration of GPT-3 are apparent at the surface, concrete evaluation metrics ensure models and prompt strategies are performing as well as possible.

Luckily, Spellbook makes that easy, allowing users to run instant programmatic evaluation in classification use cases, or sending model outputs to Scale’s high-quality workforce to get quantitative evaluation metrics for generative use cases.

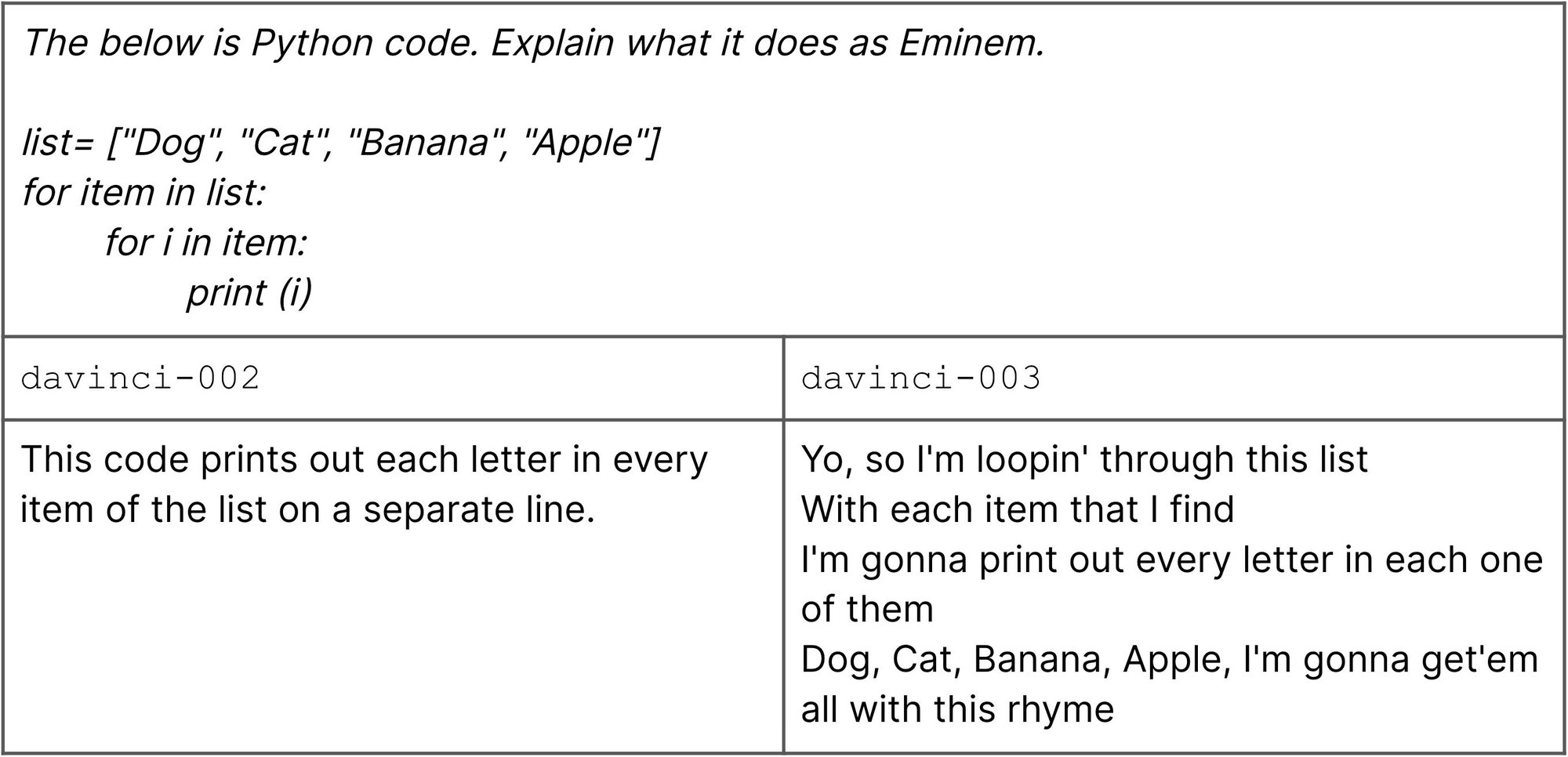

P.S. If you’re looking for a little dramatic flair in your emails to coworkers, davinci-003 has you covered. It performs significantly better at creatively translating text or even explaining code as different celebrities in a zero-shot setting.

BranchCommitmaintestmaintest

Write a short rhyming poem about winter in New York davinci-002 davinci-003

In New York City When winter descends

The air is so crisp and chilly

And the wind never ends

The snowflakes are so big and fluffy

You just want to grab a handful

And then make a snowball

People hurry to and fro

Bundled up in coats

Hats, and scarves, and gloves

No one wants to be caught

Lights are twinkling in the night, It's wintertime in New York City so bright.

The snow is glistening, the wind is cold,

The atmosphere is really bold.

The snowflakes fall with a gentle grace,

Adorning the city with a lovely lace.

The air is crisp, and the people merry,

It's a magical winter in New York City.

Text Generation

The most notable performance leap comes in how davinci-003 understands word and sentence structure, especially rhyming and syllables. In prompting for a poem that rhymes, davinci-003 constructs a desired output, whereas davinci-002 largely fails to implement rhyming.